Scientific information about the toxicity of chemicals and radiation plays a central role in environmental protection. Key statutes that protect human health and ecology demand scientifically credible data on the toxicity of pollutants and commercial products. They include the Clean Air Act, the Clean Water Act, the Safe Drinking Water Act, the Toxic Substances Control Act (TSCA), the Federal Food, Drug, and Cosmetic Act, and the Federal Insecticide, Fungicide, and Rodenticide Act (FIFRA). These data are used both for setting regulatory standards and for backing enforcement actions. Federal agencies’ ability to protect humans and the environment depends on this one-two combination: laws and their implementing regulations provide the authority to control hazards, and science provides the evidence about when to act.

Regulators usually rely on toxicity studies, especially those using animals, for this information. Many of these studies use rodents (purpose-bred mice and rats), but other species are also employed, including dogs, cats, rabbits, guinea pigs, hamsters, birds, fish, and non-human primates such as macaques. In particular, TSCA and FIFRA are data hungry, and many animal studies are conducted to satisfy their regulatory needs.

Toxicity studies using animals and the field of regulatory toxicology first came into widespread use in the early 1940s, after consumer and medical products led to several tragedies involving death or disfigurement. One of those products was an antibacterial drug designed for children called elixir of sulfanilamide. To make the drug easier to administer, the manufacturer prepared a raspberry-flavored liquid version. Because it is difficult to get sulfanilamide into liquid form, it was dissolved in 70 percent diethylene glycol, which is poisonous. More than 100 children lost their lives. A consumer product called Lash Lure also led to death and blindness. Lash Lure was an aniline-based compound that was marketed to beauty parlors as a dye for eyebrows and eyelashes. When applied to human skin, aniline compounds can cause severe allergic responses, and at least one death and several cases of blindness attributed to Lash Lure were reported.

The loss of life and health for which these products were responsible led Congress to pass laws that required pre-market testing. The scientific community rose to the challenge and developed tests that could be used to evaluate drugs and consumer products. Later in the 20th century, when Congress began to pass environmental laws, legislators demanded pre-market testing of some new compounds (such as pesticides in FIFRA) and granted EPA the authority to require post-market testing of some compounds already on the market (such as in TSCA). This was regulatory toxicology 1.0.

Today, the need to test environmental chemicals for hazard and risk is much greater. A more nuanced and sophisticated view has emerged of how the environment affects humans. Thanks in large part to the above laws, there are few, if any, immediate deaths or illnesses caused by exposure to environmental chemicals and radiation; however, there is strong evidence that chronic exposure to less-than-lethal levels of environmental chemicals damages health and diminishes quality of life. In addition, studies in human populations have shown that environmental exposures, together with lifestyle choices and factors such as active smoking, poor diet, stress, and legal and illicit drug intake, can combine to degrade well-being and welfare.

Consequently, more data are needed on a greater number of chemicals and over a broader range of health endpoints. While many environmental risk assessments are focused on cancer, there is an increasing recognition that neurological endpoints such as Parkinson’s and Alzheimer’s diseases, as well as cardiovascular diseases, are associated with environmental factors. Cumulative exposures to several contaminants in combination also create health problems that need to be studied. Unfortunately, modern data needs far outstrip data production. The current system of regulatory toxicology and related testing must evolve if it is to meet demand. This evolution must start with a fresh way of addressing data gaps and needs, acknowledging that a transition must be made, and charting a course for that evolution. To demonstrate the progress made and the challenges that confront us, it is useful to examine in detail two studies from the National Academies of Sciences, Engineering, and Medicine (NASEM) published 16 years apart.

NASEM studies are frequently commissioned by federal agencies to shed light on difficult scientific issues. The study committees bring together experts who review the literature, take testimony from other experts, and prepare a consensus report. Of the two reports discussed below, the first was commissioned by EPA and the second was commissioned by the National Institutes of Health at the direction of Congress.

As amended in 2016, TSCA is the primary U.S. law that regulates chemicals in commerce. One of the major criticisms of the original TSCA was that it was not effective at generating the toxicological knowledge that EPA, citizens, and the business community needed to make decisions about the hazards of chemicals commonly manufactured. It has been estimated that of the approximately 85,000 chemicals in commerce, we have solid toxicological information on fewer than 1,000.

In 2007, in part to address this toxics ignorance gap, NASEM prepared the first of the two reports, entitled “Toxicity Testing in the 21st Century: A Vision and a Strategy.” The report, usually known for short as TT21C, is a detailed critique of the current animal-centered toxicity testing paradigm. It sets out a plan for how the toxicity testing system should change to meet data and decisionmaking needs. It recommends that the current testing paradigm be changed in a way that allows for the development of improved predictive, human biology-based science that supersedes what can be gleaned from animal studies.

TT21C reached several conclusions. First, the report pointed out that animal studies are time consuming and expensive. Second, it noted that animal studies are not always predictive of human responses. Third, looking ahead, it called for toxicity testing for regulatory purposes to be built around the use of in vitro systems that use human cells and tissues. Last, it outlined how and why systems biology—a scientific approach that integrates information from cells, tissues, organs, and population studies—and pathways of toxicity will provide better science for regulatory decisionmaking.

Since the report’s release, EPA has expended considerable effort to make its vision and strategy a reality. These efforts have been spurred, in part, by changes in the law, including provisions in the amended TSCA that explicitly address new methods and encourage the use of non-animal alternatives when scientifically appropriate. The amended TSCA also requires the agency to publish a work plan to reduce the use of vertebrate animal testing and to increase the use of what the agency calls “new approach methodologies,” or NAMs, which it defines as technologies, methods, approaches, or combinations of these techniques that can provide information on chemical hazard and risk assessment to avoid the use of live-animal testing. EPA also publishes a list of available NAMs. At the time this article was written, that list contained about 30 tests across a variety of endpoints, such as skin and eye damage, and a few for endocrine disruption. These methods continue to be developed, and the list will expand.

The TT21C report, the changes incorporated into TSCA in 2016, and new in vitro and in silico technologies are catalysts to increase the pace of change in the practice of regulatory toxicology. A 2020 review paper by Daniel Krewski, the former chair of the TT21C committee, and several committee members concluded that “overall, progress on the 20-year transition plan laid out . . . in 2007 has been substantial. Importantly, government agencies within the United States and internationally are beginning to incorporate the new approach methodologies envisaged in the original TT21C vision into regulatory practice.”

The 2016 TSCA rewrite contains provisions that specifically encourage the use of non-animal alternatives in place of vertebrate animals; in conjunction with improvements in scientific technique, these provisions have led to progress. Because of TT21C and the amended TSCA, EPA has made a good start on replacing vertebrate animals in its risk assessments. However, as the next NASEM study demonstrates, considerable work remains before we can replace animals completely.

Since the beginning of the COVID-19 pandemic, the scientific community has complained that there is a shortage of non-human primates, or NHPs, for research. The United States uses about 70,000 NHPs per year for brain, infectious disease, and aging studies. China stopped exporting NHPs in 2020, contributing to a shortage in the United States, which at that time imported 60 percent of its NHPs from China. In particular, the United States experienced a 20 percent drop in imports of long-tailed macaques, a species used by private industry for drug and vaccine research. The second of the two NASEM reports, this one commissioned by the National Institutes of Health, was published in 2023. The report, “Nonhuman Primates in Biomedical Research: State of the Science and Future Needs,” points out that “although nonhuman primates (NHPs) represent a small proportion—an estimated one-half of 1 percent—of the animals used in biomedical research, they remain important animal models due to their similarities to humans with respect to genetic makeup, anatomy, physiology, and behavior. Remarkable biomedical breakthroughs, including successful treatments for Parkinson’s and sickle cell disease, drugs to prevent transplant rejection, and vaccines for numerous public health threats, have been enabled by research using NHP models. However, a worsening shortage of NHPs, exacerbated by the COVID-19 pandemic and recent restrictions on their exportation and transportation, has had negative impacts on biomedical research necessary for both public health and national security.”

On November 16, 2022, the Department of Justice announced an indictment of eight people for smuggling long-tailed macaques into the United States, and for conspiracy to violate the Lacey Act and Endangered Species Act. Two of those who were indicted for smuggling and conspiracy were officials of the Cambodian Forestry Administration. Long-tailed macaques are an endangered species. The indicted persons allegedly removed wild, long-tailed macaques from national parks and other protected areas in Cambodia, then took the macaques to breeding facilities where they were provided fraudulent export permits, which falsely stated that the macaques were bred in captivity. As a result, Cambodia stopped exporting NHPs, which is expected to significantly impair drug development in the United States. In fact, as of September 2022, Cambodia accounted for 60 percent of American imports of NHPs. Additionally, Inotiv, the United States’ largest commercial monkey dealer, decided to halt sales of all Cambodian NHPs in its possession in the United States. Interestingly, in a report filed with the Securities and Exchange Commission, Inotiv stated that, following January 13, 2023, it “has shipped a select number of its Cambodian NHP inventory; however, [Inotiv] is not currently shipping Cambodian NHPs at the same volumes that it was prior to the [indictment].” In that same report, Inotiv expressed that it “expects to establish new procedures before it will resume Cambodian NHP imports.”

Further contributing to the NHP research shortage is the issue of transportation. Since 2000, an increasing number of airlines have refused to transport NHPs for use in research, primarily in response to public concerns. In 2018, the National Association for Biomedical Research filed a complaint against the airlines with the Department of Transportation. As of 2022, DOT had not ruled on the complaint, and many believe that the department’s prolonged silence amounts to a rejection. Consequently, the number of NHPs available for research in the United States has declined drastically in recent years. Considering the airline transport factor along with the reality that Chinese and Cambodian NHPs are unavailable to the United States, it is important that the federal government treat the shortage as an opportunity to advocate for and dramatically increase funding for non-animal test methods. Funding directed this way will enable the United States to replace NHPs more rapidly in research and drug development.

To answer questions about the need for, and value of, NHPs in research, Congress directed NIH to sponsor a NASEM study. The 2023 report explores the state of biomedical research using NHPs and their future roles in NIH-supported research. It also assesses the research and development status of new approach methodologies, such as in vitro and in silico models, and their potential role in reducing the use of NHPs.

The new report reached the following conclusions. Research using NHPs has contributed to numerous public health advances; continued research using NHPs is vital to the nation’s ability to respond to public health emergencies. More NHPs will be needed in the future. The shortage has gotten worse, and without financial and other support, the ability of the NIH to respond to public health emergencies will be severely limited. Based on the current state of NAMs, the previously mentioned new approach methodologies, there are no alternative approaches that can totally replace NHPs, although there are select NAMs that can replicate certain complex biological functions, and it is reasonable to be optimistic that in future years it might be possible to replace NHPs. To reduce reliance on the animals, additional resources are needed, along with a collaborative effort among those developing NAMs and those who currently utilize NHPs, and a plan and process to validate these new NAMs.

This report does endorse a strategy to acquire more NHPs for scientific research. While that is where much of the news reporting has focused, there are important lessons about the need to build NAMs that can replace the use of NHPs. The report makes it clear that NIH and researchers should not view the shortage of NHPs available for research as a problem that needs to be fixed simply by acquiring more NHPs. As discussed, there are substantial roadblocks to acquiring a greater supply, and several of them, such as issues associated with transportation from one nation to another, are beyond the control of the scientific community. Therefore, it makes sense to view the NHP shortage through a different lens—as an opportunity to enable research by heavily encouraging the use and development of, and dramatically increasing funding for, human-relevant, non-animal test methods.

Despite its general support for the use of NHPs, one of the conclusions reached in the NIH report was that even though there are currently no alternatives that can fully replace NHPs, there is reason to be optimistic because new approach methodologies continue to advance rapidly. In addition, the study concluded that “development and validation of new approach methodologies (in vitro and in silico model systems) is critically important to support further advances in biomedical research. This may reduce the need for nonhuman primate (NHP) models in the future, and/or enhance their utility. Additionally, this may help to mitigate shortages in NHP supply and the high cost of NHP research.”

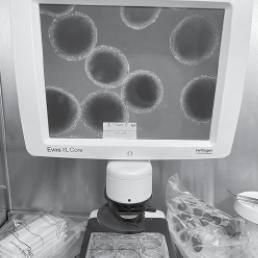

As non-animal test methods such as organs-on-a-chip and organoids are developed, refined, and mass-produced, and as they become affordable for U.S.-based laboratories, American research can progress without being hampered by a lack of NHPs or by reliance on the countries that supply them. Furthermore, studies indicate that, compared to traditional regulatory toxicology tests, non-animal test methods are cheaper, faster, and reliable, and they do not subject animals to confinement and suffering. Moreover, the shortage of NHPs should induce the federal government to fund and promote the use of non-animal test methods that are already available to laboratories, including stem cells, in silico models, and microdosing.

As noted above, the NIH report states that NHPs “remain important animal models” and that the NHP shortage “has had negative impacts on biomedical research necessary for both public health and national security.” While these problems could be addressed in the short term by increasing the NHP supply, a long-term solution must focus on the underlying science and on how to obtain comparable information without NHPs. As the report notes, NAMs are not yet sufficiently available to completely replace animal models. To reduce reliance on NHPs, additional resources are needed, including a process for NAM validation.

Taken together, these reports show a way to improve regulatory toxicology, increase scientific knowledge, and substantially reduce animal use. The TT21C report created a movement at EPA toward the discovery and deployment of NAMs in the TSCA program. During the past 16 years, progress has been made in moving away from vertebrate animal testing toward a regulatory toxicology regime that is more human-centric, faster, more reliable, and potentially more useful for EPA risk assessments. Provisions added to TSCA in 2016 catalyzed EPA’s movement toward NAMs by requiring an agency work plan and a list of acceptable NAMs. On the other hand, the NIH report demonstrates some of the barriers and challenges that remain in completing the transition to the vision outlined in TT21C. While the NIH report endorses the value of NHP research and recommends increasing the supply for science, its discussion and analysis of NAMs should not be overlooked. Although replacing NHPs might not be possible in the short term, it should be a long-term goal. To achieve it, federal government agencies and Congress should consider taking the following actions.

First, there must be an investment of resources in the development of NAMs, especially for more complex health endpoints. EPA’s NAMs list is populated mostly with tests for simpler biological endpoints. While these tests are important, the real challenge will be to develop NAMs for complex endpoints such as reproductive and developmental impacts, cancer, and neurological diseases. Models for some of these endpoints, such as brain organoids, are in use now and have provided important insights. For example, a brain organoid model was used to demonstrate that the virus that causes COVID-19 could infect human brain tissue. This is consistent with the current state of the science, as described in the NIH report, which notes that NAMs have provided useful information but cannot yet fully replace NHPs in research. One possible way forward is to dedicate a small portion of the NIH budget to NAMs. A 2 percent solution would draw researchers to the field. Training on how to use NAMs should also be a target of this funding.

Second, Congress should consider applying the TSCA NAMs approach to other statutes so that more federal agencies are required to mount a NAMs program. While such a program could take different forms depending on the agency and its mandates, two key federal organizations should be prioritized. As the dominant research funder in the nation, NIH should be required to develop a strategic plan for NAMs. Although NIH is not primarily a regulatory body, its funding supports thousands of researchers throughout the country, including tens of thousands of students. Part of its strategic plan could be a list of NAMs used by those it funds, along with an assessment and description of these NAMs. Bills have been introduced in Congress that seek to prod NIH on alternatives. For example, in 2021 Representative Vern Buchanan (R-FL) and the late Representative Alcee Hastings (D-FL) introduced the Humane Research and Testing Act. Among other things, this bill would have established a new institute dedicated to NAMs housed under NIH. Another important agency is the Food and Drug Administration, which could play a much larger role in the transition to NAMs. FDA published a predictive toxicology roadmap in 2017 that calls out NAMs as promising new technologies. Last year, Congress enacted the FDA Modernization Act 2.0. This law removed a requirement for animal testing in new drug protocols and replaced it with language that allows FDA to consider the best science. Despite these changes, though, FDA has yet to accept data from a non-animal alternative in the drug development process. Congress could require FDA to establish a work plan for alternatives to accelerate their use in the agency’s regulatory processes.

Third, federal agencies must clearly explain how NAMs should be validated and, for regulatory agencies, must outline the steps to be taken so that NAMs can be listed as satisfactory-for-use in decisionmaking. The NIH report touches on some of these issues. In chapter 4, the report explains the concepts of context-of-use, qualification, and validation. Validation is of particular importance. It is explained in the NIH report as “the process by which the reliability and relevance of a technology or approach is established for a defined purpose using specific criteria,” which is adapted from the definition of validation provided by the Organization for Economic Cooperation and Development. Once validated, a methodology is deemed to have been blessed by an agency as one that produces robust and reliable data. This conceptual explanation needs to be transformed by regulatory agencies into a procedure that NAM developers can follow. A method reaches regulatory acceptance if it is routinely used by the agency for decisionmaking. Without clarity on how these concepts are operationalized, however, it will be extremely difficult to design NAMs for decisionmaking.

Regulatory toxicology 1.0 emerged after it became clear that the nation needed a way to assess the dangers of exposure to chemicals and radiation. It served its purpose well in the 20th century, but it is now time to recognize that the environmental protection challenges of the 21st century require more. Regulatory toxicology 2.0 is evolving. It is centered on human biology and uses far fewer non-human animals. Further, it is potentially less expensive and faster, and it has the promise of being more predictive and protective of human and environmental health. The two NASEM reports discussed here capture key features of 21st century regulatory toxicology and demonstrate current gaps and how to address them.